Fast Query Engines

Search engines return results in milliseconds: millions of results in tenths of a second. Regardless of the number of websites online, search engines scour the internet for the results most relevant to a search.

The way they do it, however, is ever-changing. In the beginning, search engines worked on literal matches. They literally matched the words you were using to perform your search with everything in their database. If it matched, it was included in the results; if not, it wasn’t.

When the number of sites started growing, search engines needed a new indicator to filter the relevant matches. One of the chosen indicators was the number of links pointing to a website: the more links a website had, the more authority search engines gave it. But that only proved that favoring quantity over quality doesn’t work in the context of web search.

Interestingly, user experience (how much the user enjoyed the websites that were returned as results) did not matter at all. Things like loading speed or readability counted for nothing in the eyes of the search engine.

1. Literal matches

In the early days of the World Wide Web, search engines operated on very basic algorithms. They acted as huge databases with efficient regular expression functions that returned matches for whatever the user typed in the search box. Neither search intent nor the battle for ranking was in the picture. In fact, one of the first search engines, JumpStation, returned results as it found them, with no ranking formula in place.
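To make the idea of literal matching concrete, here is a minimal sketch in Python. It is not how any real engine was implemented: the `PAGES` dictionary, its URLs, and the `literal_search` function are all hypothetical, and the sketch uses plain word comparison instead of regular expressions. It only illustrates the behaviour described above, where a page either contains the query words or it doesn't, and results come back in the order the pages were found, with no ranking.

```python
# Hypothetical in-memory "database": page URL -> page text,
# stored in the order the pages were discovered.
PAGES = {
    "example.com/cats": "Cats are small domesticated animals",
    "example.com/dogs": "Dogs are loyal animals",
    "example.com/cars": "Cars need fuel",
}


def literal_search(query: str, pages: dict[str, str]) -> list[str]:
    """Return every page that contains all query words, in discovery order."""
    query_words = query.lower().split()
    results = []
    for url, text in pages.items():
        page_words = set(text.lower().split())
        # A page is included only if every query word appears literally.
        if all(word in page_words for word in query_words):
            results.append(url)
    return results


print(literal_search("animals", PAGES))
```

Searching for "animals" returns the cats page and then the dogs page, simply because that is the order in which they were stored. Nothing in the sketch asks which of the two is more useful to the person searching.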

Because early engines only matched words, SEO (Search Engine Optimization) used to be all about three things:

- Keyword stuffing;

- Meta keywords;

- Keyword frequency.

1.1. Keyword stuffing

Since the earliest versions of search engines worked on matches, a website’s best chance to increase its visibility was to match as many keywords as possible. And in short, that’s what keyword stuffing is: cramming as many words and phrases (that users are known to use in their searches) as possible onto a single page, in an effort to make it reachable from any search query.

However, search engine developers soon discovered that pages that were marked as relevant by their algorithms were completely ignored by the users. That was because, while bots did not care about what they were “reading”, no human being would have stayed on a website just to read the same 10-15 terms exhaustingly repeated on the same page. Instead of optimizing for their human audience, websites optimized for bots.

Search engine algorithms have since been updated, and keyword stuffing as an optimization strategy is now obsolete and penalized. There’s still no set-in-stone number for keyword density: some recommend keeping it between 1% and 2%, and others go as high as 5%. More on that shortly.

1.2. Meta keywords

Invisible to visitors on the rendered page, meta keywords used to be the go-to technique to improve a website’s visibility. Webmasters used meta keywords to “tell” search engines what their website was about.

It’s not difficult to imagine, then, why meta keywords met their downfall quite soon after the keyword stuffing tactic hit the mainstream. Everyone was trying to trick the system by packing their website with loads of meta keywords; some weren’t even relevant to the website or the page itself anymore.

Plus, meta keywords are also visible in the source code of a page, which means that any competitor can snatch them in a swift copy-paste move. This leaves the original website vulnerable to ranking drops.

The giant in the field, Google, announced back in 2009 that its search engine doesn’t even use meta keywords for ranking purposes. Even though a Google executive said that using them does not have a negative impact on rankings, the general consensus among SEO experts remains the same: get rid of all the meta keywords and never use them again.

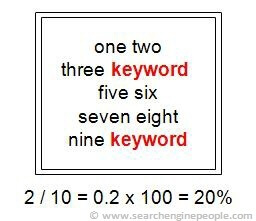

1.3. Keyword frequency

Keyword frequency – or keyword density – is basically a percentage that shows how many times a keyword or a phrase appears in a body of text, compared to the total number of words the text contains. While the formula to calculate it might seem pretty straightforward at first, there’s actually been a lot of debate and not a lot of consensus. Mathematical formulas are not the matter at hand right now, but you can find more information on Wikipedia.
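As a rough illustration, the simplest variant of the calculation (occurrences of the keyword divided by the total word count, times 100) can be sketched in Python. The function name `keyword_density` and the tokenization rule are assumptions made for this example. Other variants exist, for instance ones that weight a multi-word phrase by the number of words it contains, which is part of why there is no consensus on a single formula.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of the text's words accounted for by occurrences of the keyword.

    Assumes the simplest variant: each occurrence of the keyword or phrase
    counts once, divided by the total number of words in the text.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    key = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key:
        return 0.0
    n = len(key)
    # Slide a window of the phrase length over the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key)
    return 100.0 * hits / len(words)


print(keyword_density("SEO tips and SEO tricks", "seo"))
```

In this five-word sample, "seo" appears twice, so the density is 40%. That is far above any of the ranges recommended earlier, and it is exactly what a stuffed page looks like in numbers.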

As we mentioned earlier, there’s no magic number for foolproof keyword density. The main recommendation here is to write for humans first and optimize for engines later. As discussed in the previous section about keyword stuffing, nobody wants to (or will, for that matter) read one single idea spread across several paragraphs, all wrapped around the same 5 terms. Plus, search engines have become smarter and smarter, and no longer tolerate black-hat techniques meant to trick them.

2. Quantity before quality

2.1. Number of links as ranking signal

Similar to keywords, the start of the search engine era saw the number of links pointing to a page as a ranking signal. The more people talked about you, the more relevant you were. The authority of the websites that mentioned or linked towards you was not important. Whether it was a basic blog or the BBC Online Service (which, by the way, was launched in 1994), search engines did not see any difference.
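A minimal sketch of this kind of ranking, under stated assumptions: the `LINKS` list and its domain names are made up for illustration, and `rank_by_inbound_links` is a hypothetical helper, not a reconstruction of any real engine's formula. It simply counts how many links point to each page and ignores who is doing the linking.

```python
from collections import Counter

# Hypothetical link graph: each pair is (source page, target page).
LINKS = [
    ("smallblog.example", "shop.example"),
    ("bbc.example", "news.example"),
    ("mirror1.example", "shop.example"),
    ("mirror2.example", "shop.example"),
]


def rank_by_inbound_links(links: list[tuple[str, str]]) -> list[str]:
    """Order target pages by raw inbound link count, highest first."""
    # The source of each link is deliberately ignored: every link counts the same.
    counts = Counter(target for _source, target in links)
    return [page for page, _count in counts.most_common()]


print(rank_by_inbound_links(LINKS))
```

Here `shop.example` comes out on top with three links from obscure sources, ahead of `news.example` with its single link from a well-known one. Since the source is never inspected, every link carries the same weight no matter where it comes from.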

Yet again, people found ways to shortcut their way to the first positions of a results page. If it didn’t matter where the links came from, that meant they could generate them themselves. And so PBNs (Private Blog Networks) were born. A PBN was a network of websites all focused on pushing a single website as high as possible on the results page. Needless to say, before long, search engines cracked down on the tactic and adjusted their algorithms.

Unfortunately, using a PBN to manipulate rankings in the search results is still a common occurrence today, even though it’s more damaging than helpful, and sometimes seriously penalized. “PBN backlinks are a kiss of death to your website.”

But PBNs weren’t the only way of exploiting this reliance on the number of links. Some other examples are:

- Link schemes (gathering links from wherever possible, regardless of relevancy, and sometimes paying for them);

- Website manipulation (creating mirror or useless sites with the sole purpose of making them link towards the same website);

- Invisible text and links (using links invisible to the human eye, but discoverable by search engines).

2.2. Number of searches and clicks as a ranking signal

Another ranking signal that bombed was the number of searches for a term and clicks on a specific result. In short, the number of people who searched for a certain keyword, and the match they implicitly made with a certain website by clicking on it, informed search engines of the website’s relevancy for the term and for the public.

The more people searched for a term and clicked on a certain result, the more search engines understood that that was what they were looking for, and the more they showed said result on the results page. Quite logical, right?

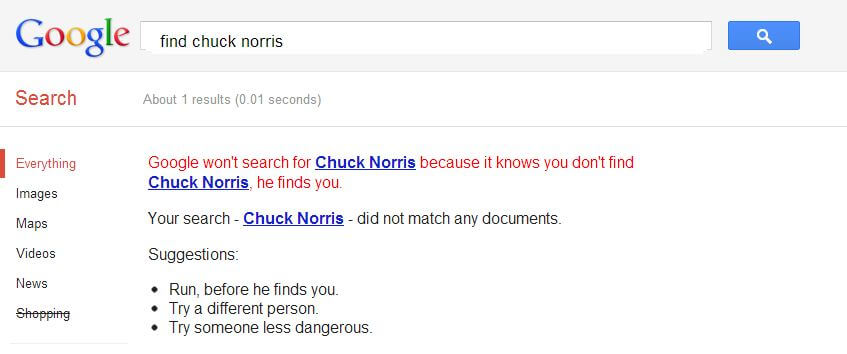

Well, no. Because people are endowed with something no bot possesses: a sense of humour. Google bombing, a technique of pushing an unrelated website or page to the first results page simply for the sake of comedy or controversy, has led to a number of (mostly) hilarious situations.

A famous example is the Google look-alike website nochucknorris.com, which popped up whenever someone searched for “find Chuck Norris”. Based on the notorious Chuck Norris jokes, the website said that “Google won’t search for Chuck Norris because it knows you don’t find Chuck Norris, he finds you.” The domain is now defunct.

Technically, Google bombing is still possible today, although it would be a tremendous effort to make it happen. Search engines are more prepared than ever to deal with a situation of this sort. Plus, it’s frowned upon and, of course, can be penalized.

3. User Experience didn’t matter

Imagine the most relevant website for a phrase you’re typing into a search engine. All the information you need is there, it’s structured perfectly, and there’s no redundancy in the keywords used. Content-wise, it’s perfect.

However, once you click on it, the website takes forever to load, you receive an alert from your antivirus software regarding the security of the website, and a different ad pops up every 30 seconds. Do you still stay on the website for the sake of the information or do you angrily click the Close button?

Surprisingly, in the battle of rankings, one of the major influences on user experience, page speed, was not a concern for search engines until 2010, when Google announced that it would take page speed into account when computing rankings. For mobile, that change happened 8 years later, in 2018.

Cut to May 2020 and Page Experience is announced as the newest, shiniest ranking signal for Google. It includes metrics such as loading times, interactivity, stability, safe browsing, and some others that we will delve into later.